The ICC rankings are a much-maligned attempt to derive some sort of meaning from the international cricket schedule. However, it strikes us that they no longer answer the question for which they were devised.

Who’s the best in the world? We now have three different answers: the best Test side, the best one-day international (ODI) side and the best Twenty20 international (T20I) side.

This is all well and good – cricket is a sport more or less defined by the varied challenges confronted by its players. However, the time pressures resulting from such a weighty fixture list have left each of the formats – and therefore each set of rankings – subtly diminished.

Take England, for example

In recent years there has been a change of priorities, such that England players with Test potential are now less likely to be groomed for that format if they are already key members of the limited-overs squads. Given a choice between gaining first-class experience and playing in the IPL or in short-format internationals, the former generally loses out.

The upshot has been a stronger 50-over side and a stronger 20-over side, but a Test team shorn of at least a couple of players who could have made the grade given more long-format experience.

The Test team was recently defeated by Australia, whose coach subsequently pointed to tiredness as a reason why many of his players underperformed in the one-day series that followed.

Australia prioritised the Tests and won them; England prioritised the one-dayers and won them. Similar stories play out on every single modern tour. Every nation is to some degree compromised by the schedule and results are always to some degree shaped by the participants’ respective priorities.

An overview

To get a clear picture of which nations are good at cricket and which are shit at cricket, it’s necessary to step back and look at all three formats together.

This is how the idea of the format-spanning points system came about. It didn’t catch on, but it was basically an attempt to draw things together and make everyone care about all three formats.

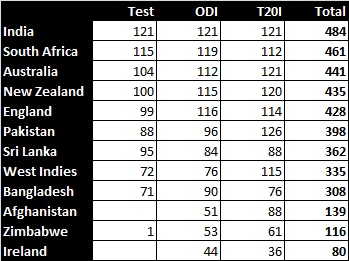

We thought it would be instructive to take a similar approach and combine the ICC rankings.

As with the points system, Tests are worth double because a Test is a two-innings game.
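Roughly speaking, and assuming each team's combined score is simply the sum of its ICC rating points in each format with the Test rating counted twice (R here is our own shorthand for a team's rating in a given format), the sum looks like this:

$$
S = 2R_{\text{Test}} + R_{\text{ODI}} + R_{\text{T20I}}, \qquad \text{Test share of the weighting} = \frac{2}{2 + 1 + 1} = \frac{1}{2}
$$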

How did that pan out then?

Well it ended up with everyone in almost exactly the same order as in the Test rankings.

The conclusion we draw from this is that combined rankings actually work quite well.

Maybe I am being dense and missing some irony here, but if Tests count double, and thus contribute half the weighting to the rankings, it is almost inevitable that the order will be pretty similar to the Test order, unless there are some pretty drastic swings in the other two formats. The more accurate conclusion is that loaded dice give more predictable results.

Doubling the points for Tests is not quite as influential as it would have been had we assigned points according to ranking position (10 for first, 9 for second, 8 for third, etc.).

Using the ICC’s ranking points, a team could be two or three points ahead in Tests but a country mile behind in one of the other formats, and that would be enough to see it drop several places overall.
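To illustrate, here's a rough Python sketch with two made-up teams. The ratings are invented for the purpose and are not real ICC figures; the positional scheme is the hypothetical 10-9-8 one mentioned above, and tied ratings aren't handled.

```python
# Hypothetical ratings for two made-up teams (not real ICC figures).
RATINGS = {
    "Team A": {"Test": 115, "ODI": 95, "T20I": 105},
    "Team B": {"Test": 113, "ODI": 125, "T20I": 100},
}
TEST_WEIGHT = 2  # Tests count double, as in the combined rankings


def rating_point_totals(ratings, test_weight=TEST_WEIGHT):
    """Sum each team's rating points, multiplying the Test rating by test_weight."""
    return {
        team: test_weight * r["Test"] + r["ODI"] + r["T20I"]
        for team, r in ratings.items()
    }


def positional_totals(ratings, test_weight=TEST_WEIGHT, top_score=10):
    """Award top_score for first place in each format and one fewer per place below.

    Ties are not handled: teams with equal ratings keep their input order.
    """
    totals = {team: 0 for team in ratings}
    for fmt in ("Test", "ODI", "T20I"):
        order = sorted(ratings, key=lambda t: ratings[t][fmt], reverse=True)
        weight = test_weight if fmt == "Test" else 1
        for place, team in enumerate(order):
            totals[team] += weight * (top_score - place)
    return totals


def standings(totals):
    """Return team names ordered from highest to lowest combined score."""
    return sorted(totals, key=totals.get, reverse=True)


if __name__ == "__main__":
    by_points = rating_point_totals(RATINGS)
    by_place = positional_totals(RATINGS)
    print("Rating points:", by_points, standings(by_points))
    print("Positional:", by_place, standings(by_place))
```

With these invented numbers, Team A leads the Tests by two points, yet Team B comes out on top on rating points (451 to 430) thanks to its 30-point ODI advantage. Under positional scoring, Team A stays ahead (39 to 37), because the size of the ODI gap no longer matters, only the order.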

The main thing shaping these combined rankings is that the order of the teams in Tests and ODIs is actually very similar.

I don’t understand the rationale for doubling Test points. Why double? Why not x5 (number of days) or x10-ish (number of overs)? It seems to me a Test is just a contest, as are ODIs and T20s. A win in any format is a win. Shouldn’t they all carry the same weight?

Yes, it is indeed just a contest, same as the others, but there seems to be an acceptance that Test cricket is somehow a greater endeavour and warrants greater weight. Perhaps the fact that there is an additional possible outcome (a draw, as opposed to a tie) makes a win more valuable.

We suppose the reasoning for doubling points is that Test cricket is a two-innings format, whereas the other two are one innings formats.

Anyone thought to ask Duckworth, Lewis or the other one for an incontrovertible answer?

David Kendix is the rankings boffin, not Duckworth or Lewis.

I’m pretty sure he would say that there is no incontrovertible answer; the weighting would always be (yet another) matter for controversy and debate, as indeed are the rate of decay and the cut-off date already applied to older matches in the rankings.

If I remember and get a suitable moment, I shall raise the matter with him when I next see him and report back.

Ever realised it’s all about home versus away in Test cricket? Which teams are any good at winning away series these days? Not many.

Yes, any team half decent at it tends to end up top.

England and Australia seem to be realising that one-sided contests between them aren’t for the greater good and seem keen to give the touring team better preparation. Should it happen, it’ll be interesting to see whether the same philosophy is applied to other series.

USE WEIGHTED AVERAGE CONCEPT AND ASSIGN DIFFERENT WEIGHT TO DIFFERENT FORMATS

Can you shush it down a bit? Some of us come here to get some sleep.

Would we be interested in which country is best at tennis? Bear in mind this would have to involve lawn, table and that strange version Ged plays.

Similarly, a cue sports all-format league would have to encompass snooker, pool, billiards and dick snooker.

‘That’s my cue’ has a whole new meaning…

Ah, dick snooker. Simpler times.